In my post “Strategic Tunnel Vision”, I touched on the concept of capability. I discussed how focusing on new capabilities can crowd out existing capabilities and the detrimental effects of that when those existing capabilities are still necessary. I also spoke to how choices about strategic capabilities can trickle down to effect tactical capabilities.

What I failed to do, however, was define what was meant by the term “capability”. That’s a pretty big oversight on my part, because, in my opinion, understanding the concept is critical across all levels of architectural concerns.

Tom Graves, in his “Definitions on capability”, defines the term (along with some related concepts):

— Capability: the ability to do something.

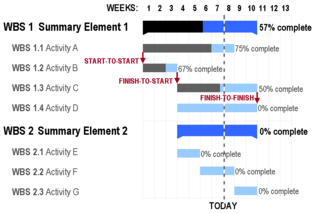

— Capability-based planning: planning to do something, based on capabilities that already exist, and/or that will be added to the existing suite of capabilities; also, identifying the capabilities that would be needed to implement and execute a plan.

— Capability increment: an extension to an existing capability; also, a plan to extend a capability.

— Capability map: a visual and/or textual description of (usually) an organisation’s capabilities.

Yes, I do know that those definitions are terribly bland and generic – and they need to be that way. That’s the whole point: they need to be generic enough to be valid and usable at every possible level and in every possible context – otherwise we’ll introduce yet more confusion to something that’s often way too confused already.

That last paragraph is critical. The concept of “capability” is a high-level one that is useful across multiple levels of architectural concern (ie. application, solution, enterprise IT, and the enterprise itself). Quoting Tom again:

Note what else is intentionally not in that definition of ‘capability‘:

- there’s no actual doing – it’s just an ability to do something, not the usage of that ability

- there’s no ‘how’ – we don’t assume anything about how that capability works, or what it’s made up of

- there’s no ‘why‘ – we don’t assume any particular purpose

- there’s no ‘who‘ – we don’t assume anything about who’s responsible for this capability, or where it sits in an organisational hierarchy or suchlike

We do need all of those items, of course, as we start to flesh out the details of how the capabilities would be implemented and used in real-world practice. But in the core-definition itself, we very carefully don’t – they must not be included in the definition itself.

The reason why we have to be so careful and pedantic about this is because the relationship between service, capability, function and the rest is inherently recursive and fractal: each of them contains all of the others, which in turn each contain all of the others, and so on almost to infinity. If we don’t use deliberately-generic definitions for all of those items, we get ourselves into a tangle very quickly indeed – as can be seen all too easily in the endless definitional-battles about the relationships between ‘business-function’ versus ‘business-process’ versus ‘business-service’ versus ‘business-capability’ and so on.

In short, it’s a crucial building block in our designs and plans (which is redundant, since design is a form of planning). If we don’t have and can’t get the ability to do something, it’s game over. However, as Tom noted, we need to move beyond the raw ability in order to make effective use of capabilities. We need to think timing and personnel (which will probably largely drive timing anyway). A capability later may well not be as valuable as the same capability right now.

This was brought to mind while skimming a book review on a military strategy site (emphasis added by me):

In March 2015, then-Chief of Staff of the U.S. Army General Raymond T. Odierno admitted to the British newspaper The Telegraph that the so-called special relationship between the United States and Great Britain isn’t what it used to be. “In the past we would have a British Army division working alongside an American army division,” he said, but he feared that in the future British battalions and brigades would have to operate “inside” American units. “What has changed,” Odierno declared, “is the level of capability.”

Later that week, I asked a senior British general about Odierno’s remarks. He replied, deadpan, that although Odierno’s candor was appreciated, his statement was factually incorrect. “We can still field a division,” the general insisted. “It is just a question of how long it takes us to field one.” Potential tanks, he seemed to think, were just as relevant as an actual ones.

The highlighted portion of the quote illustrates my point. Having the capability to do something immediately and the capability to do that same thing at some point in the future are not equivalent (just to be fair to the British Army, the US Army was in this same position during Operation Desert Shield – the initial ground forces that could be deployed were extremely thin). Treating them as equivalent potentially risks disaster.

It should be noted, however, that level of concern will color the perception of the value of a future capability versus a current one. At the tactical level, in business as well as in war, “…first with the most…” is likely a winning move. At the strategic level, however, where resources must be budgeted across multiple initiatives, priorities should dictate which capabilities get preference. Tactical leaders may have to be satisfied with “on time with just enough”.

Regardless of level, a clear assessment of capabilities, what’s available when, is key to making effective decisions.