I try to be disciplined about my writing (picking themes, creating a backlog, collecting notes and links on those topics, etc.), but it seems like serendipity won’t be denied, no matter what I do. On the same day that XKCD published this cartoon, Erik Dietrich published “Software Architect as a Developer Pension Plan”. While I agree with a lot that Erik says in the post, I think he also gets into a “throwing out the baby with the bathwater” situation by dismissing the need for the software architect role, part of which the cartoon illustrates.

Before I go any farther, I do need to point out that I’ve been following and interacting with Erik for about four years now. It’s a friendly disagreement; meant to be more debate than argument.

In the post, Erik makes the point (which I largely agree with) that companies see the role of software architect through a Taylorist lens. They see the software architect as a “thinker”, meant to drive a herd of “doers”:

The modern corporation, like Taylor before it, loosely divides into three categories of humans: owners/executives, managers, and grunts. Owners own and charge executives with executing their will. Executives delegate to managers. Managers think and assemble the workers into org charts designed to optimize efficiency. Workers keep their simple heads down and do as they’re told.

Theoretically, proponents would say, this applies to any domain and type of work. Corporations form themselves into these pyramids without considering any other options, whether they’re private military outfits, gigantic manufacturing facilities, or companies designing and selling software. Of course, the reality turns out to be that not all operations do well splitting thinking and labor. Let’s pick one at random. Oh, I dunno, say, writing software.

We try it. We hire junior developers to be directed by senior ones. And all of them fall in line under the watchful guise of a “tech lead,” who defers to a council of architects. And somewhere at the center of the organizational maelstrom stands The Lead Architect, directing elaborate bucket marches, water flows, and all sorts of magic, like Mickey Mouse in Fantasia. It’s a beautiful, cascading symphony of work flowing from the cleverest down to the simplest. Or… at least, that’s how it goes on paper.

Erik rightly argues that this model is fatally flawed. This is doubly so in the case of the example he gives, an individual offered a position as a software architect doing the thinking for a set of offshore “commodity” developers. Offshore development can be done well (that’s a post I’ll save for another day), but not using that model. Where Erik goes wrong, in my opinion, is in adopting this flawed definition of the software architect role.

Developers should be perfectly competent to do their own thinking. If they’re not, no software architect will be able to help. Even if that person were capable of handling the load of providing detailed directions for several developers, doing so would prevent them from attending to their own areas of concern. It would require being able to anticipate and make all the functional design decisions up front, as well as communicating them to multiple “typists” quicker than they could mindlessly hammer out the code, while still having time to consider all the requisite cross-cutting, quality of service considerations for an application. Do we really need to bother with experiments to determine that this is beyond improbable?

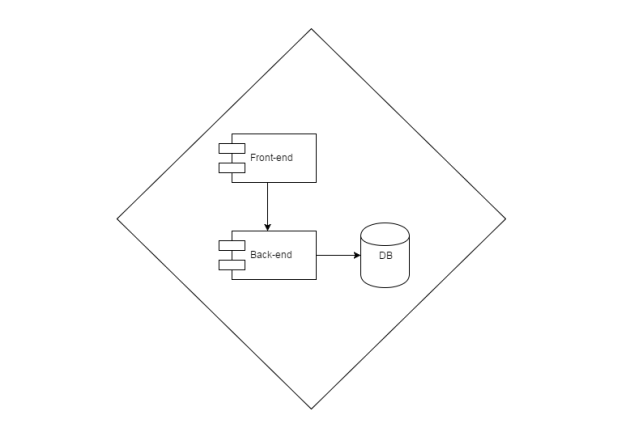

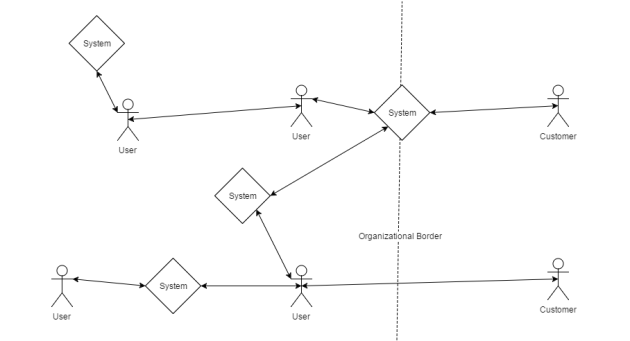

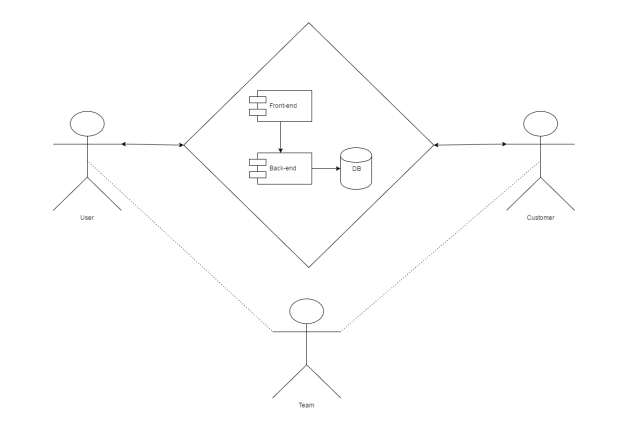

There is a separation between the developer role and software architect role, but it needs to be clearly understood as a difference in focus. A developer’s focus is more vertical, concentrating on implementing functionality while maintaining/improving/not harming the quality of service (aka the horrendously misnamed “non-functional”) aspects of the system. A software architect’s focus should be more horizontal, concentrating on the architecturally significant quality of service aspects that will enable the system to meet the needs of its stakeholders (or, at least, not unreasonably prevent the system from doing so).

The difference between the roles is a matter of thinking at different levels of abstraction, not of one being superior to the other. The two mindsets are both necessary and complementary. A high-quality architectural design without quality implementation cannot produce a high-quality system. Likewise, where there is implementation without architectural coordination, the quality of the resulting system is a matter of chance. For very small systems maintained by small, permanent teams, it may not be necessary for these roles to be distinct. In my experience, however, dealing with both the broadly general and the very specific at the same time scales poorly. Non-trivial systems quickly come to require architectural leadership, otherwise people are left fixing problems caused by problems fixed previously.

Leadership has been the main theme of almost all of my posts this month and has figured prominently in several of what’s been written this year. Many of these posts are assigned to the “Architectural Practice” category. This is because I absolutely believe that the software architect role is a leadership role. That being said, I don’t consider the Taylorist model to be effective leadership practice. In fact, I consider it an anti-pattern.

I’ve long been an advocate of a more collaborative model of practice. Rather than dictating, the software architect’s role should be one of consensus building and communication. Significant architectural disagreement indicates an issue with one or more members of the team. Significant uncertainty about the architecture indicates an issue with the software architect role.

Effective systems require both breadth and depth of effectiveness. This holds true for software systems as much as social systems. For the social systems producing software systems for use by other social systems (i.e. software development teams), it is imperative.